Code Repository: GitLab

Visual SLAM Practice (In Progress)

Localisation • SLAM • g2o • pangolin • Python

Introduction

Ever since I took AI Class 2011 back in high school, I have wanted to implement SLAM. At the time I even tried to make a Lego NXT robot map its environment using a single ultrasonic sensor. I came close to this goal during my honours project but, sadly, did not succeed. This is my next attempt.

A secondary goal is to learn how to use bundle adjustment optimisers. I want to find out how much one improves the estimated trajectory, or whether SLAM cannot work without one at all. For now, I’m using g2o.

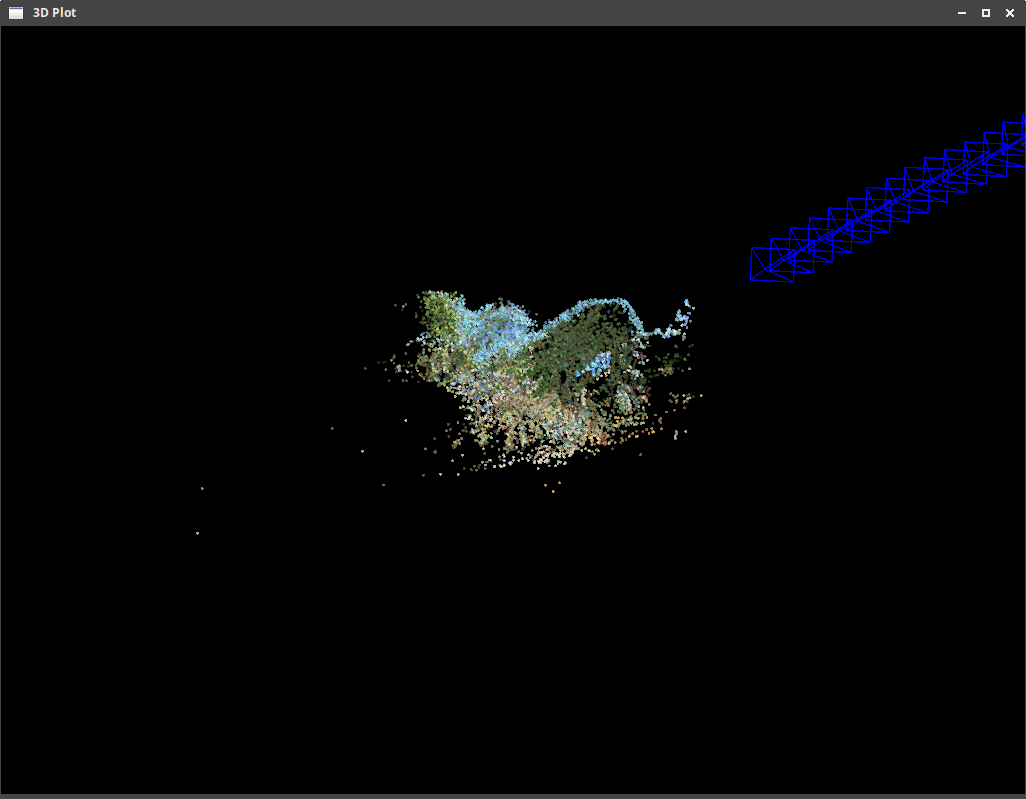

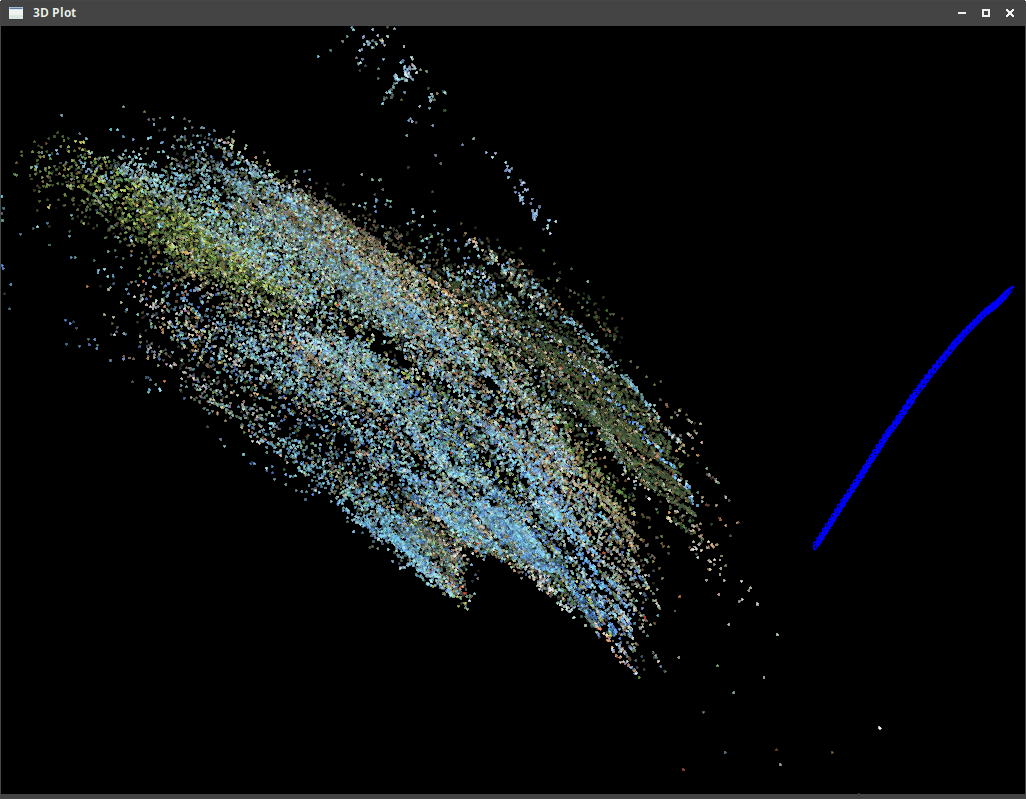

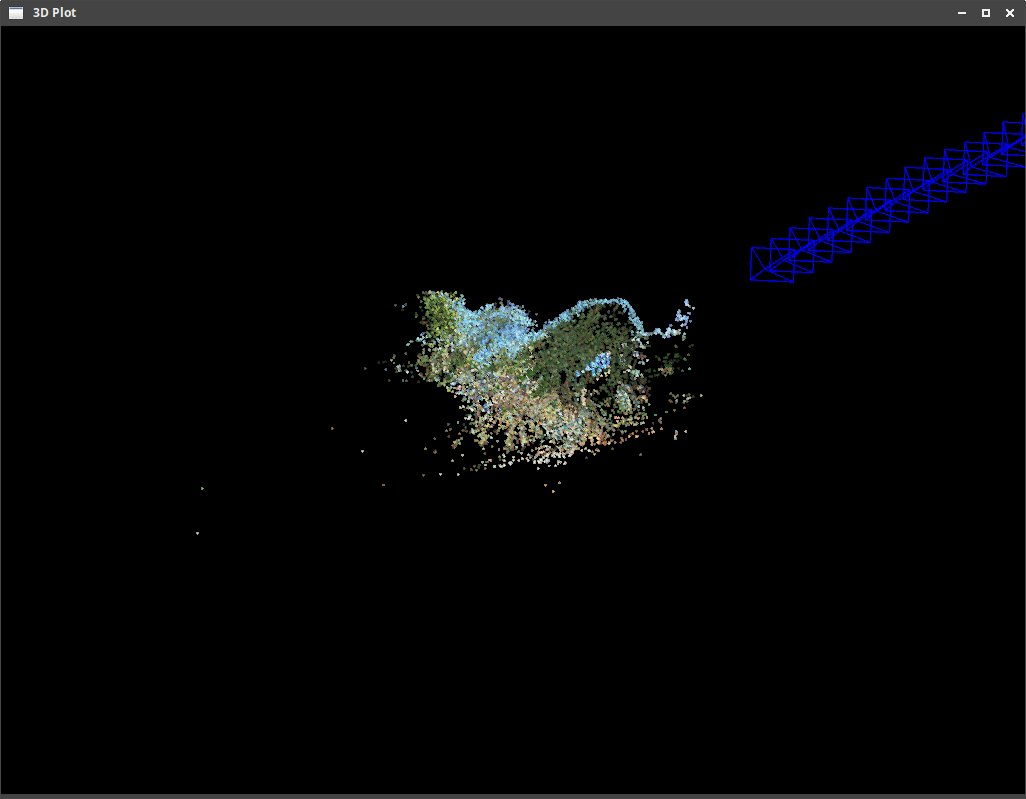

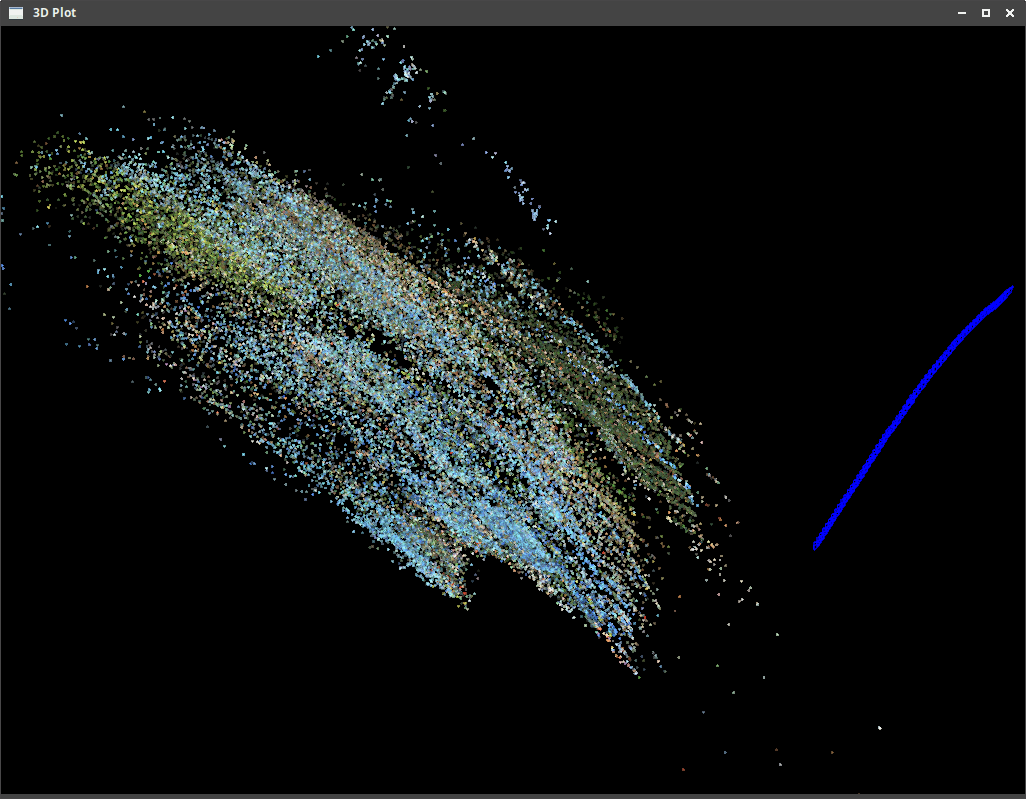

I want to make a car build a map and localise itself using either monocular or binocular vision. For this, I’m attempting to extract ORB features and descriptors, estimate camera poses and triangulate 3D points, then use g2o to refine the locations, and finally plot everything in 3D.
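To make the triangulation step concrete, here is a minimal sketch of linear (DLT) two-view triangulation in NumPy. It is not the code from this project: the intrinsics `K`, the camera placement, and the helper names `triangulate_point` and `project` are all assumptions made up for illustration, and the matched pixel coordinates are synthesised by projecting a known point rather than coming from ORB matches.

```python
import numpy as np

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices.
    x1, x2: matched (u, v) pixel coordinates in each image.
    """
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]  # de-homogenise

def project(P, X):
    """Project a 3D point X into pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical pinhole intrinsics (assumed values, not from the project).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

# First camera at the origin; second camera shifted 1 unit along +x,
# so its translation vector is t = -R @ C = (-1, 0, 0) with R = I.
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

# Synthetic ground-truth point in front of both cameras.
X_true = np.array([0.5, -0.2, 4.0])
x1 = project(P1, X_true)
x2 = project(P2, X_true)

X_est = triangulate_point(P1, P2, x1, x2)
print(X_est)
```

With noise-free matches the estimate should agree with `X_true` up to floating-point error. With real ORB matches it will not, which is where a refinement step such as g2o comes in. OpenCV's `cv2.triangulatePoints` covers the same step for many points at once and returns homogeneous coordinates.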

Currently I have g2o and the plotting (pangolin) ready, but cannot test them because of issues with 3D point triangulation.

I hope to find the time to work on this project more in the near future.

Disclaimer: This is heavily inspired by George Hotz’s Live SLAM coding session. I am using his code and thinking as a reference.

- Category: Projects

- Date: December 2018 - present